Can we ever understand what cats want? Or why AI pronouncements can sound like divine commandments? To what and to whom do we bear responsibilities and obligations? In Animals, Robots, Gods, Webb Keane explains that our answers offer clues for understanding our closely held ethics. Where philosophers and ethicists often plumb individual minds and societies to understand morality, Keane’s ethics rollicks with relationships, interactions, and situations. Our interview ranged from questioning the individualistic moral framework of the famous trolley problem, to the ethics of oracles, to the ways that AI shapes human perceptions of our own agency.

Keane, a cultural and linguistic anthropologist, is the George Herbert Mead Distinguished University Professor at the University of Michigan.

Caitlin Zaloom (CZ): Your book, Animals, Robots, Gods, suggests that we can best understand morality by examining interactions between humans and nonhumans. Have you had an experience like that in your personal life? Did you grow up with a pet?

Webb Keane (WK): I grew up with cats. I’ve always found them to be interesting social companions. Notoriously, they don’t just give you unquestioning loyalty and devotion. And yet there’s also this intriguing flow of signals as we communicate with each other. More generally, animals like cats, dogs, and horses are entrées to the question of social relations because they exist at the edge of the human.

Recognizing your own limits, as cats force you to do, requires taking the reality of other people seriously. The two are bound up with each other. What fascinates me about social interaction is that it always involves a kind of reflection. In order to communicate with you successfully, I have to be able to see myself through your eyes. This looping is crucial. I not only try to come to know you better, but in the process, I see myself from a different angle.

In my previous book, Ethical Life, I discuss how moral development depends on the ability to switch between first- and second-person perspectives. In all languages, children learn at a very early age how to shift between “I” and “you,” which requires understanding how they appear to others. This ability to shift perspectives is a fundamental precondition for the eventual development of moral awareness.

CZ: What makes this shift specifically a moral issue?

WK: It’s an affordance for the capacity to step outside of yourself and see from another’s eyes, especially their long-term concerns. Even when we quarrel, we’re engaging in a shared act. We’re collaborating on the disagreement.

CZ: That reminds me of a favorite observation of Georg Simmel’s—that competitors are actually deeply connected.

WK: Exactly. Competitors agree on what matters; they’re not just engaged in parallel play. The alternative is mutual indifference.

CZ: Recognizing an “I” and a “you” as foundational to morality also opens the door to manipulation. If you understand someone’s perspective, you could use that knowledge to dominate them. How do you see that dynamic?

WK: The most effective forms of domination come from understanding others, more than from brute force. There are vicious forms of interaction and domination that draw on the same affordances that virtuous ones do.

CZ: This simultaneous capacity for virtue and violence brings me to the trolley problem, which you write about. Can you explain the trolley problem and your critique of it?

WK: The trolley problem was concocted by two philosophers in the mid-20th century as a thought experiment about moral judgment. The classic version presents a runaway trolley heading toward five people. You have the option to pull a switch and divert the trolley onto another track where only one person stands. Most people say they’d pull the switch because saving five at the cost of one is mathematically justified.

But then comes the nasty variation: Instead of a switch, you’re on a bridge with a very large man. Pushing him onto the tracks would stop the trolley, saving five people. The utilitarian calculus is identical—the outcome, five lives saved at the cost of one. Yet when researchers run this past people, they get a lot of resistance—most folks don’t want to push him. This resistance exposes a flaw in purely utilitarian reasoning.

CZ: So the distinction lies in the physical act itself?

WK: Partly. To push someone’s body is more intimate than pulling a switch. You might want to say, well, that’s psychology. But there’s a more distinctly ethical aspect. When you pull a switch, the man’s death is an unfortunate side-effect but it’s not what saves the other people. Had there been no one on that track, the diversion would still have saved them. But when you push him off the bridge, he has become the means to an end. Treating a person instrumentally can feel just wrong to many of us.

CZ: Your book raises questions about the cultural specificity of the trolley problem. What makes it so compelling?

WK: The trolley problem assumes a very Western, individualistic notion of agency. It places a single decision-maker in a vacuum, disconnected from social ties. Anthropologists have found that when posed with similar dilemmas, people in some societies reject the premise outright. They insist on knowing who the people are, what their relationships are. In Madagascar, for example, people just wouldn’t play along, because the problem ignored social context. They refused to treat the lever-puller or the endangered people as abstractions.

This finding resembles a classic study by the psychologist Carol Gilligan. She found that while boys saw hypothetical moral dilemmas as interesting logic puzzles, girls were more likely to ask about relationships among the protagonists. But the trolley problem forbids that kind of thinking. It places an enormous amount of weight on the cleverness of the person making the decisions and isolates moral reasoning in a way that many people, rightly, find unhelpful.

CZ: When did you start to ask questions about your own sense of morality?

WK: As a child I went to a Quaker school in Manhattan. These New York Quakers were militant egalitarians. One of the things they taught me is that all people are created equal. But as I grew older, I started to notice that it can’t possibly be true that all people are equal. Look around you! Some are tall, some are short, some are rich, some are poor, some are happy, some are sad, some are healthy, some are unhealthy. And I grew up not far from the Bowery; homeless people were always part of the street scene. At an early age I began to wonder why they were there in the street instead of me, and vice versa. Eventually this question leads to the sociological one: What forces or structures bring this about? And to the moral one: What kind of society finds this tolerable?

It wasn’t until much later, when I started working in the anthropology of religion, that the other shoe dropped. Quakerism as I knew it was very secularized, but it is Christian at its roots. And I had forgotten this part of their teaching: It’s not that all people are created equal; it’s that all people are created equal “in the eyes of God.” It takes a transcendental perspective to see that equality. You can find this “third-person” viewpoint in religion but also in secular versions like the ethical thought of Kant or Rawls.

But I argue that the third-person point of view can’t be all of ethics: it might tell you what, in principle, is right, but it can’t tell you why I should care. For that we need to include the dynamics between first and second person, between me and you. I’ve come to understand ethics as a constant negotiation between and movement among these different perspectives.

CZ: That brings us to AI and the ethical position it seems to occupy. Why do you think artificial intelligence evokes such awe?

WK: AI-powered chatbots, for instance, have an uncanny quality: they give responses that seem to come from nowhere. Given the vast complexity of LLM training and reinforcement learning, even their creators don’t fully understand exactly how ChatGPT and the rest generate the specific responses they give to prompts. AI possesses “knowledge” (or something that looks like it) at a superhuman scale. And it’s utterly disembodied. These properties tempt people to see AI as almost supernatural, even if they know better. Ironically, many tech leaders, self-described rationalists, use religious language to describe AI. Elon Musk has called it “godlike,” others say it “has a soul.” Ray Kurzweil’s idea of “the Singularity” says that end times will be marked by a dramatic, sudden, and absolute rupture in reality. Everything will be utterly and totally transformed. This is straight out of the apocalyptic tradition: tweak it a bit and you’ve got the Book of Revelation. Once you start looking, you’ll see these quasi-religious responses to AI in all sorts of unexpected places.

CZ: Some AI interactions make people feel like gods, while others make AI itself seem divine.

WK: When I wrote an essay about this with the legal philosopher and computer scientist Scott J. Shapiro, we coined the expression “godbots.” This refers to chatbots that take that basic theological instinct and treat it in literal-minded terms, as a means of accessing the divine. There are godbots that will answer your theological questions for Hinduism, Islam, Christianity, Sikhism, and Buddhism. What is it about AI that invites you to make this move? As I suggested, it’s uncanny. When you don’t know why it says what it says, and it possesses seemingly superhuman capacities, mimics human intelligence, and is disembodied, it has many of the properties of a god and seems to see things from the god’s-eye perspective. Interactions with AI have many of the semiotic properties that communications with the gods often have. And so users are likely to grant it enormous authority.

As a result, it’s not surprising to see people impute oracular powers to chatbots—even to feel that they know me better than I know myself. One experimental bot was trained by crowdsourcing answers to moral problems. The bot is named after the Delphic Oracle of Ancient Greece, a priestess in the temple of Apollo who would go into a trance. You would ask the oracle about your hardest problems, and she would give a response that was understood to come from the god. So this bot is really playing with the line between AI and religion. It’s yet another example of how tempting it is to think along these lines.

CZ: I’m struck by the close connection between instruments and moral agents.

WK: Think back to where we started off, with cats and dogs. Tools can be an extension of your human capacities. That is partly what Donna Haraway meant when she said that humans are cyborgs. Any tool can give us a cyborg-like character. I tell a story in the book about a pastor who told Siri to turn on the lights in his house. Suddenly he was struck that he’d replicated God’s first commandment in the Bible, saying “Let there be light” and there was light. You might say he merged with the tool. But he’s still calling the shots.

But what if tools become more powerful and develop those semiotic features that we identify with human agency like the capacity to use language? At this point they provoke the worry that they will become autonomous of us.

CZ: Yes, autonomous and dominating.

WK: We’re not there yet. The intermediate step is important. Anthropologists can recognize this as fetishism, which is when humans create a device or a tool and imagine that it possesses powers that are in fact projections of our own. The danger of fetishism isn’t that it’s a mistake; it’s that in the process we come to misrecognize ourselves, granting authority to something we have created and thereby robbing ourselves of our own. I think right now that’s the stage that we’re at with AI. The devices are not wholly autonomous of us, but we are starting to lose a sense that we made them. “We,” in this case, refers both to the human resources they are trained on, and the corporate powers that profit from them.

CZ: How is that a moral issue for you?

WK: Some moral philosophers debate whether AI should be granted moral status. They are asking, “Is there a point where humans will treat AI as moral agents to whom we owe certain moral obligations?” There’s debate about what the criteria would be. Would the AI have to be sentient? Would they have to be capable of experiencing pleasure and pain? If so, they would be subject to standard utilitarian calculus.

I’m more interested in how AI troubles the line between human and nonhuman in ways that don’t just raise questions about them but raise questions about us. That’s why I stress the echoes between some ways people respond to AI and our long history of making oracles and ways to access the divine.

But there’s another side to the question too: how we determine who or what counts as “close enough” to human to be ethically relevant. This question too has a long history. Enslavers once expelled enslaved people from the circle of moral consideration altogether. Today, arguments about inclusion in the moral circle often revolve around the rights of animals. New technologies are now posing questions that we keep encountering in different forms and contexts across time and space. Indisputably there is a lot in AI that is unprecedented and dramatically new. But we can’t fully grasp what’s new about it unless we also understand what we’ve seen before.

This article was commissioned by Caitlin Zaloom.

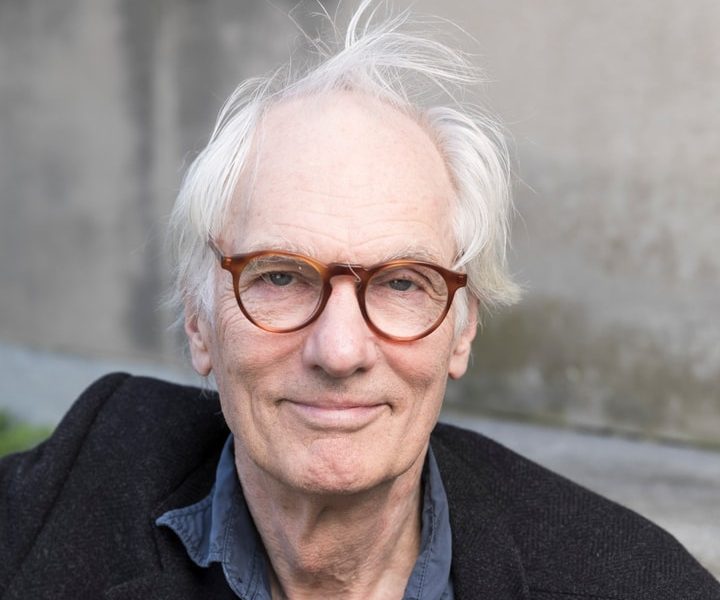

Featured image: Webb Keane. Used with permission of Penguin Press.